Testing on a small emailing list with A/B tests

by Edward Touw

It’s important to tweak and test your emailings until you’ve come up with the perfect document. But how do you do that with a small emailing list, where split-run emailings have little meaning?

If you have a small emailing list, let’s say fewer than 5,000 recipients, split-run emailings have little use. After all, the test groups are so small that it’s impossible to create a representative sample group, which makes the results unreliable.
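To get a feel for how much uncertainty a small test group carries, here is a minimal sketch. It assumes a simple normal approximation for the margin of error of an open rate; the function name `open_rate_margin`, the group sizes and the 25 percent open rate are all hypothetical and chosen only for illustration.

```python
import math


def open_rate_margin(open_rate: float, group_size: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for an observed open rate.

    Uses the normal approximation for a proportion, which is a simplifying
    assumption and gets rougher as the group gets smaller.
    """
    return z * math.sqrt(open_rate * (1 - open_rate) / group_size)


# Hypothetical test-group sizes; the 25% open rate is an assumed figure.
for size in (100, 250, 1000, 5000):
    margin = open_rate_margin(0.25, size)
    print(f"group of {size}: 25% open rate, plus or minus {margin:.1%}")
```

Under these assumptions, a test group of 100 recipients gives a margin of roughly 8.5 percentage points, so a modest difference between two versions can easily be noise.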

But that doesn’t mean it’s impossible to test on a small emailing list.

A/B test

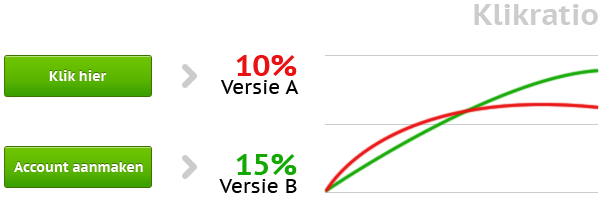

In the world of email marketing, the terms split-run and A/B test are often used interchangeably. Contrary to popular belief, however, there is a difference between the two.

- When conducting a split-run test, you send two versions of the same emailing to a small part of the send list. The best-performing version of the two is then sent to the rest of the group.

- With an A/B test, you send version A first and version B later, for example a week afterwards. By comparing the results of these mailings, you decide which version to use for your target group.

As mentioned earlier, when it comes to small emailing lists, a split-run mailing is less likely to give you reliable results. A/B tests, however, are a suitable alternative.

Create similar circumstances

If you use A/B tests to compare two different versions of an emailing, it is very important to create similar circumstances. To give you an example:

- You send version A on a Monday, resulting in a 55 percent open rate

- You send version B on a Tuesday, resulting in a 75 percent open rate

While one version might have scored better than the other, you still don’t know whether it was document B that caused the difference, or whether Tuesday is simply a better day to send an email than Monday.
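The comparison in this example can be sketched with a standard two-proportion z-test. This is only an illustration: the function name `two_proportion_z` and the list size of 1,000 recipients per send are assumptions, and only the 55 and 75 percent open rates come from the example above.

```python
import math


def two_proportion_z(opens_a: int, sent_a: int, opens_b: int, sent_b: int):
    """Return (z, two-sided p-value) for the difference between two open rates."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate under the assumption that both versions perform the same.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Assumed: 1,000 recipients per send (hypothetical), 55% and 75% open rates.
z, p = two_proportion_z(opens_a=550, sent_a=1000, opens_b=750, sent_b=1000)
print(f"z = {z:.2f}, p = {p:.3g}")
```

A small p-value here only suggests that the gap is unlikely to be random noise. It cannot tell you whether the document or the weekday caused it, which is why the circumstances of both sends need to match.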
